MPI Communications

Point-to-point Communication

Point-to-point communication transfers data between a pair of processes: one process sends and the other receives. MPI provides the send (MPI_Send) and receive (MPI_Recv) functions for point-to-point communication within a communicator.

MPI_Send(void* message, int count, MPI_Datatype datatype, int destination, int tag, MPI_Comm comm)

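- message: initial address of the send buffer

- count: number of entries (elements of type datatype, not bytes) to send

- datatype: MPI datatype of each entry

- destination: rank of the receiving process

- tag: integer label that identifies the type of a message; a receive only matches messages whose tag agrees with the tag it asks for

- comm: communicator, for example MPI_COMM_WORLD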

MPI_Recv(void* message, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)

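- message, count, datatype, tag, comm: as for MPI_Send, except that message is the receive buffer and count is the maximum number of entries it can hold

- source: rank of the sending process (or MPI_ANY_SOURCE to accept a message from any process)

- status: on return, holds information about the received message, such as its actual source (status.MPI_SOURCE) and tag (status.MPI_TAG)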

Note

For more on MPI_Status, see MPI_Status.

MPI Datatype

Most of the MPI functions you will use require you to specify the datatype of your message. The table below shows how MPI datatypes correspond to C datatypes.

| MPI Datatype | C Datatype |
|---|---|
| MPI_CHAR | char |
| MPI_SHORT | signed short int |
| MPI_INT | signed int |
| MPI_LONG | signed long int |
| MPI_UNSIGNED_CHAR | unsigned char |
| MPI_UNSIGNED_SHORT | unsigned short int |
| MPI_UNSIGNED | unsigned int |
| MPI_UNSIGNED_LONG | unsigned long int |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |

Table 1: Corresponding datatype between MPI and C

Example 2: Send and Receive Hello World

```c
#include <stdio.h>
#include "mpi.h"

#define FROM_MASTER 1

int main(int argc, char **argv) {
    int rank, nprocs;

    /* 12 characters plus the terminating '\0' */
    char message[13] = "Hello, world";

    /* status for MPI_Recv */
    MPI_Status status;

    /* Initialize MPI execution environment */
    MPI_Init(&argc, &argv);

    /* Determine the size of MPI_COMM_WORLD */
    MPI_Comm_size(MPI_COMM_WORLD, &nprocs);

    /* Determine the rank of this process in MPI_COMM_WORLD */
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    /* This example needs a process with rank 1 */
    if (nprocs < 2) {
        if (rank == 0)
            fprintf(stderr, "Run with at least 2 processes\n");
        MPI_Finalize();
        return 1;
    }

    /* If the process is the master */
    if (rank == 0) {
        /* Send message (including '\0') to process whose rank is 1 in MPI_COMM_WORLD */
        MPI_Send(message, 13, MPI_CHAR, 1, FROM_MASTER, MPI_COMM_WORLD);
    }
    /* If the process has rank of 1 */
    else if (rank == 1) {
        /* Receive message sent from master */
        MPI_Recv(message, 13, MPI_CHAR, 0, FROM_MASTER, MPI_COMM_WORLD, &status);

        /* Print the rank and message */
        printf("Process %d says : %s\n", rank, message);
    }

    /* Terminate MPI execution environment */
    MPI_Finalize();
    return 0;
}
```

Comments: This MPI program illustrates the use of the MPI_Send and MPI_Recv functions. The master (rank 0) sends the message “Hello, world” to the process whose rank is 1; after receiving the message, that process prints it along with its rank. The program must be run with at least two processes.

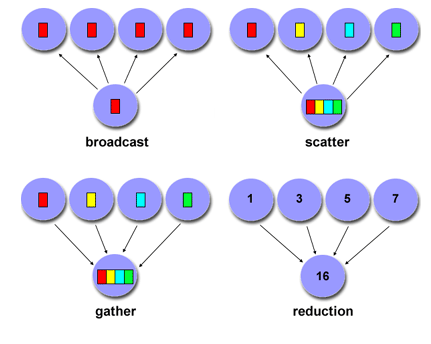

Collective Communication

Collective communication involves every process in a communicator: all processes in the communicator must call the collective function. We will use MPI_COMM_WORLD as our communicator, so the collective communication includes all processes.

Figure 5: Collective Communications Obtained from computing.llnl.gov [1]

MPI_Barrier(MPI_Comm comm)

This function performs barrier synchronization in a communicator (for example, MPI_COMM_WORLD). Each process blocks at the MPI_Barrier call until every process in the communicator has reached the same MPI_Barrier call.

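MPI_Bcast(void* message, int count, MPI_Datatype datatype, int root, MPI_Comm comm)

This function sends (broadcasts) the message from the process whose rank is root to all other processes in the communicator. Every process calls MPI_Bcast with the same root; on the non-root processes, message is the buffer that receives the data.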

MPI_Reduce(void* message, void* receivemessage, int count, MPI_Datatype datatype, MPI_Op op, int root, MPI_Comm comm)

This function combines the values contributed by every process in the communicator using the reduction operation op, and stores the result in receivemessage on the root process. When count is greater than 1, the operation is applied element by element. Examples of MPI_Op include MPI_MAX, MPI_MIN, MPI_PROD, and MPI_SUM.

Note

For more on MPI_Op, see MPI_Op.

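MPI_Scatter(void* message, int sendcount, MPI_Datatype sendtype, void* receivemessage, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)

This function divides the message on the root process into equal contiguous parts and sends one part to each process in the communicator, in rank order: the process with rank 0 (the master) gets the first part, the process whose rank is 1 gets the second part, and so on. sendcount is the number of elements sent to each process, not the total size of the message, and the send buffer is only used on the root.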

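MPI_Gather(void* message, int sendcount, MPI_Datatype sendtype, void* receivemessage, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)

This function collects a message from each process in the communicator into receivemessage on the root process, stored in rank order. recvcount is the number of elements received from each process, not the total. This function is the reverse operation of MPI_Scatter.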

Let’s Try It

In the next section we will describe how to compile and run these examples so that you can try them.

References

[1] https://computing.llnl.gov/tutorials/mpi/