4.1. Message Passing Pattern: Key Problem

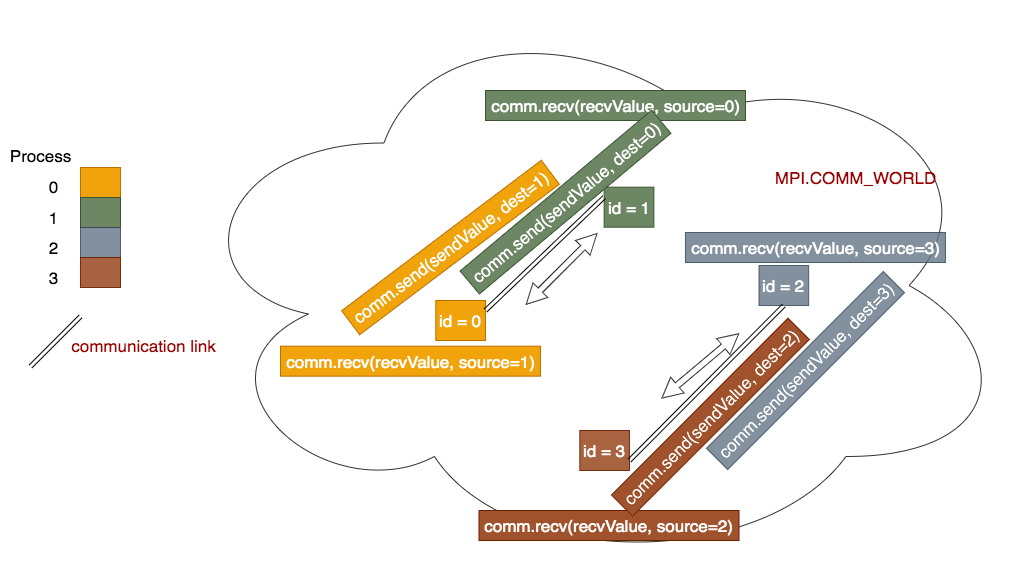

The following code represents a common error that many programmers have inadvertently placed in their code. The concept behind this program is that we wish to use communication between pairs of processes, like this:

For message passing to work between a pair of processes, one must send and the other must receive. If we wish to exchange data, then each process will need to perform both a send and a receive. The idea is that process 0 will send data to process 1, who will receive it from process 0. Process 1 will also send some data to process 0, who will receive it from process 1. Similarly, processes 2 and 3 will exchange messages: process 2 will send data to process 3, who will receive it from process 2. Process 3 will also send some data to process 2, who will receive it from process 3.

If we have more processes, we still want to pair processes up to exchange messages. The mechanism for doing this is for each process to know its own process id. If your id is odd (1, 3 in the above diagram), you will send to and receive from your neighbor whose id is id - 1. If your id is even (0, 2), you will send to and receive from your neighbor whose id is id + 1. This works for more than 4 processes as well, as long as the number of processes is even.
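The pairing rule can be checked without MPI. The following is a minimal sketch in plain Python; the names `partner` and `pairs` are hypothetical helpers introduced here for illustration and are not part of the program file.

```python
def partner(rank):
    """Return the id of the paired neighbor: id - 1 for odd ids, id + 1 for even ids."""
    return rank - 1 if rank % 2 == 1 else rank + 1


def pairs(num_processes):
    """List (id, neighbor id) for every process; the process count must be positive and even."""
    if num_processes < 2 or num_processes % 2 != 0:
        raise ValueError("number of processes must be positive and even")
    return [(rank, partner(rank)) for rank in range(num_processes)]


if __name__ == "__main__":
    for rank, other in pairs(4):
        print("process {} exchanges with process {}".format(rank, other))
```

Applying `partner` twice returns the original id, which is what makes the exchange symmetric: each process is the neighbor of its own neighbor.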

Warning

The following code has a problem called deadlock. Deadlock happens when every process is waiting on an action from another process, so the program cannot complete. On Linux systems such as the Raspberry Pi, press ctrl-c (hold the control key and press c) to stop the program.

Program file: 04messagePassingDeadlock.py

Example usage:

python run.py ./04messagePassingDeadlock.py 4

Here the 4 is the number of MPI processes to start.

run.py executes this program within mpirun using the number of processes given.

Exercise:

Run this code with 4 processes. Observe the deadlock and stop the program using ctrl-c.

4.1.1. Dive into the code

In this code, can you trace what is happening to cause the deadlock?

Each process, regardless of its id, executes a receive first. In this model, recv is a blocking function: it does not return until it gets data from a matching send. So every process is blocked waiting to receive a message, and no process ever reaches its own send.

    from mpi4py import MPI

    # function to return whether a number of a process is odd or even
    def odd(number):
        if (number % 2) == 0:
            return False
        else:
            return True

    def main():
        comm = MPI.COMM_WORLD
        id = comm.Get_rank()                   # number of the process running the code
        numProcesses = comm.Get_size()         # total number of processes running
        myHostName = MPI.Get_processor_name()  # machine name running the code

        if numProcesses > 1 and not odd(numProcesses):
            sendValue = id
            if odd(id):
                # odd processes receive from their paired 'neighbor', then send
                receivedValue = comm.recv(source=id-1)
                comm.send(sendValue, dest=id-1)
            else:
                # even processes receive from their paired 'neighbor', then send
                receivedValue = comm.recv(source=id+1)
                comm.send(sendValue, dest=id+1)

            print("Process {} of {} on {} computed {} and received {}"
                  .format(id, numProcesses, myHostName, sendValue, receivedValue))
        else:
            if id == 0:
                print("Please run this program with the number of processes "
                      "positive and even")

    ########## Run the main function
    main()
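
The receive-first behavior can also be reproduced without MPI. The sketch below is an illustration under stated assumptions, not part of the program file: two threads stand in for a pair of processes, a `queue.Queue` per thread stands in for its incoming messages, and a timeout stands in for pressing ctrl-c. The names `run_pair` and `mailboxes` are hypothetical.

```python
import queue
import threading


def run_pair(timeout=0.5):
    """Simulate two paired 'processes' that both receive before they send."""
    # One mailbox per simulated process; a "send" puts a value in the partner's mailbox.
    mailboxes = {0: queue.Queue(), 1: queue.Queue()}
    results = {}

    def process(rank):
        other = 1 - rank
        try:
            # Receive first, as both branches of the deadlocking program do.
            received = mailboxes[rank].get(timeout=timeout)
        except queue.Empty:
            # The timeout is an assumption of this sketch; a blocking MPI recv would wait forever.
            results[rank] = "blocked in recv"
            return
        # Only reached if a message arrived, which cannot happen here.
        mailboxes[other].put(rank)
        results[rank] = received

    threads = [threading.Thread(target=process, args=(rank,)) for rank in (0, 1)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_pair())
```

Both simulated processes give up inside the receive, because each one's send sits behind a receive that can only be satisfied by the other's send.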

Can you think of how to fix this problem?

Since recv is a blocking function, some processes need to send first, while their paired neighbors recv first and only then send. Ordering the calls this way means every recv has a matching send already on its way, which gives a coordinated exchange.
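
One way to reason about this ordering before touching MPI is to write down each process's sequence of operations and check that every step is matched by the opposite operation on its neighbor. The following is a minimal sketch; choosing the even ids to send first is an assumption made here for illustration (the opposite choice works equally well), and all function names are hypothetical.

```python
def partner(rank):
    """Paired neighbor: id - 1 for odd ids, id + 1 for even ids."""
    return rank - 1 if rank % 2 == 1 else rank + 1


def coordinated_order(rank):
    """Assumed choice for this sketch: even ids send first, odd ids receive first."""
    return ("send", "recv") if rank % 2 == 0 else ("recv", "send")


def recv_first_order(rank):
    """Every id receives first, as in the deadlocking program."""
    return ("recv", "send")


def is_coordinated(order, num_processes):
    """True if each step of every process is matched by the opposite step on its neighbor."""
    opposite = {"send": "recv", "recv": "send"}
    for rank in range(num_processes):
        mine = order(rank)
        theirs = order(partner(rank))
        if any(opposite[a] != b for a, b in zip(mine, theirs)):
            return False
    return True


if __name__ == "__main__":
    print(is_coordinated(coordinated_order, 4))
    print(is_coordinated(recv_first_order, 4))
```

The receive-first ordering fails the check at the first step, since both members of a pair are receiving and neither is sending.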

Go to the next example to see the solution.